The objectives of this deliverable, D4.4, concern the after-action analysis of AR-based learning and biofeedback, based on data captured from multiple sensors during AR-supported tasks. The captured data combine several core components, such as 3D scanning, head position, video of the field of view, and voice. All of these data must be reviewable and interpretable after the action, at desktop level by data scientists and task/performance analysts, as well as by end users, e.g. using the HoloLens.

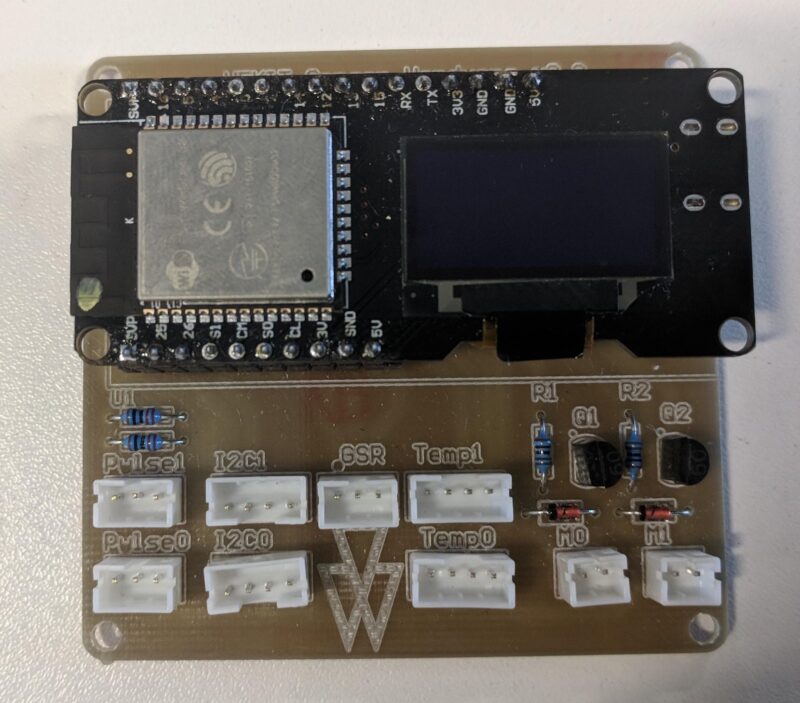

The objectives above are met in this deliverable using instances of data capture from our end-user trials of the WEKIT prototype platform and the linked sensor ecosystem ("the garment"). Trial data are collected using three categories of sensors: human, environmental, and device sensors. Integrated into the garment are sensors for cardiac activity, galvanic skin response (GSR), electromyographic activity of the forearm and hand, position tracking, and 9-axis inertial motion, as well as temperature and humidity. The construction of the sensor ecosystem has to relate to the architecture proposed in D2.1. The sensor ecosystem must also be integrated with the design of the wearable prototype garment covered in deliverable D5.8, with the final electronic architecture (D3.5), and with the visualization of sensor data covered in D5.10.
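
To make the three sensor categories concrete, the following is a minimal sketch of how timestamped readings might be tagged by category and grouped for after-action review. It is not the WEKIT data format: the names `SensorCategory`, `SensorReading`, and `group_by_category`, the sensor labels, the assignment of each sensor to a category, and the sample values are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class SensorCategory(Enum):
    # The three categories named in the text.
    HUMAN = "human"
    ENVIRONMENTAL = "environmental"
    DEVICE = "device"


@dataclass(frozen=True)
class SensorReading:
    # Hypothetical record layout, not taken from the deliverable.
    timestamp_s: float          # seconds since the start of the recording
    sensor: str                 # illustrative sensor label, e.g. "gsr"
    category: SensorCategory    # assumed mapping of sensor to category
    value: float                # made-up sample value


def group_by_category(readings):
    """Group readings by category, each group sorted by timestamp."""
    grouped = {category: [] for category in SensorCategory}
    for reading in readings:
        grouped[reading.category].append(reading)
    for group in grouped.values():
        group.sort(key=lambda r: r.timestamp_s)
    return grouped


# Illustrative values only; these are not trial data.
readings = [
    SensorReading(0.20, "temperature", SensorCategory.ENVIRONMENTAL, 22.5),
    SensorReading(0.10, "gsr", SensorCategory.HUMAN, 0.8),
    SensorReading(0.05, "heart_activity", SensorCategory.HUMAN, 72.0),
]
grouped = group_by_category(readings)
```

Keeping the category on each reading, rather than in separate per-category files, is one way to let a desktop review tool filter or align streams on a shared time axis; other layouts would serve equally well.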